ICCV Workshop on Computer Vision for the Factory Floor

October 17, 2021, 0800-1200 EST.

Visual defect detection and visual measurements in manufacturing were amongst the first practical applications of computer vision. Since then, applications in manufacturing have continued to leverage advances in computer vision, and the sector has been a consistent source of funding to support our community’s research at various levels.

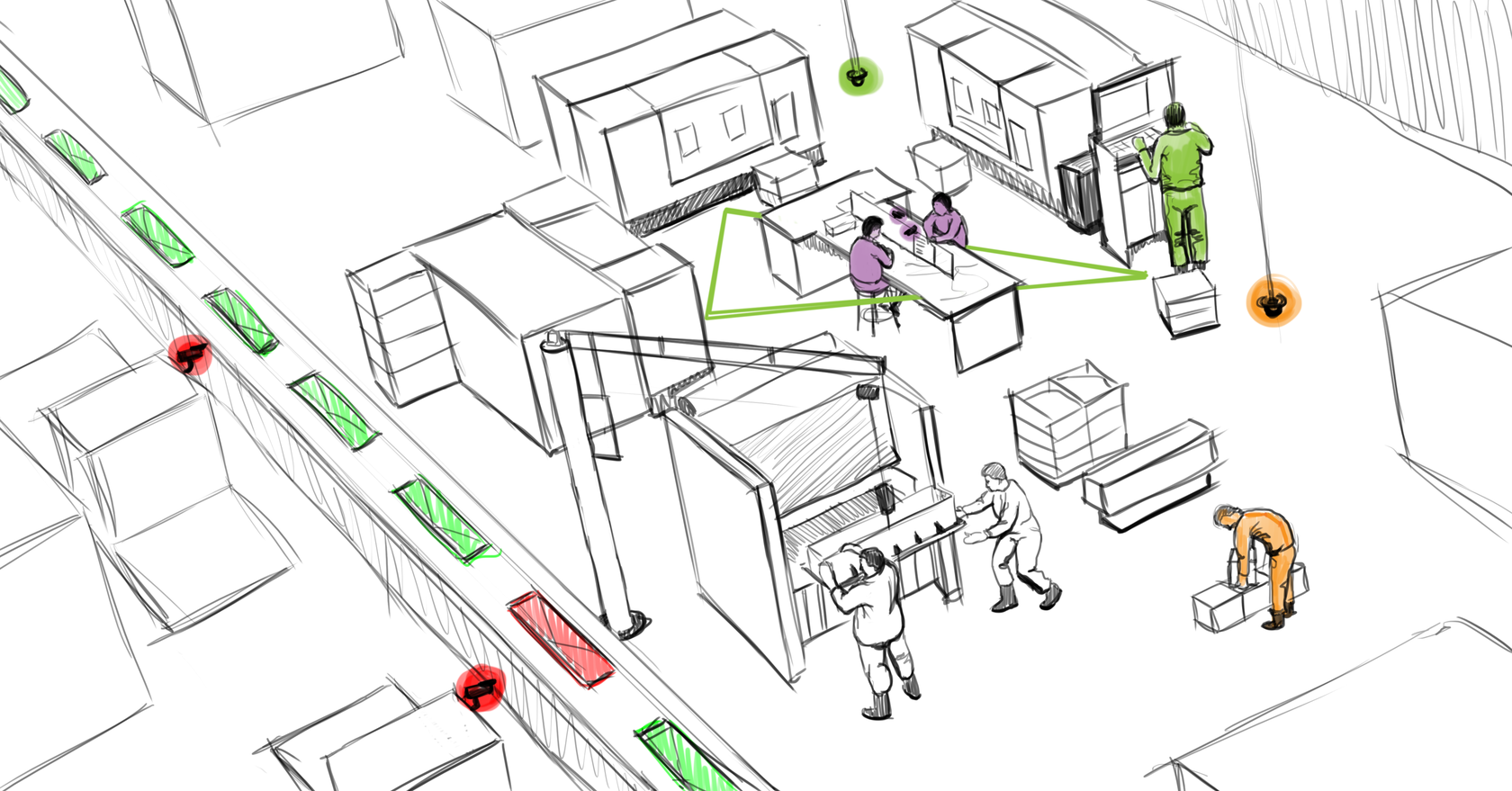

With the recent deep learning revolution, several novel applications of computer vision and augmented reality have emerged in manufacturing, such as human activity recognition for understanding manual assembly work, as well as a myriad of applications in robotics. With this workshop, we bring together computer vision researchers and leaders from academia and industry for an exchange of ideas that lie at the intersection of computer vision and the smart factory.

Our Speakers

0805 – 0835 EST

Dr. Nils Petersen

From AR Work Instructions to Assistance. The delivery of work instructions that naturally integrate into a frontline worker's field of view has been a salient application of AR. Leveraging AI and Computer Vision allows going beyond spatial awareness alone, capturing the user's context more comprehensively. A generic approach to this Cognitive AR is discussed from an application-centric viewpoint. The talk also covers aspects of authoring that incorporate information sources such as CAD data, authored instructions, and runtime observation of the procedure. Features and properties are motivated through industry use case examples.

0835 – 0900 EST

Dr. Jamal Afridi

A Computer Vision View from a Global Manufacturing Company. This talk will discuss computer vision systems in the context of different types of manufacturing processes. We will discuss examples of image-based computer vision in factories as well as those requiring spatio-temporal solutions. At the end, we will also demo how 3M leverages some of its manufactured films to help create robust computer vision systems.

0900 – 0930 EST

Nandini Ananthula

Pain points in manufacturing. We are in the 21st century and yet a lot of our manufacturing processes are manual. Industry 4.0 has been a buzzword for a long time, but real-world implementation is nowhere to be seen. The extent of manual intervention needed in factories holds back effective collaboration. This talk will give an overview of the pain points in manufacturing and how computer vision can help boost product output and reliability. Starting with an introduction to Industrial Engineering, the talk will offer insight into several automation examples and explore a few ongoing manufacturing issues that could use applications of computer vision and augmented reality.

0930 – 1000 EST

Dr. Behnoosh Parsa

Computer Vision Applications in Ergonomics. Recently there has been a lot of interest in using computer vision to automate ergonomics risk assessments. These assessments are time-consuming and should be performed by an expert in the field to maintain an acceptable level of consistency. Automation of such a process saves time, reduces costs, and allows companies to scale monitoring of their workplace. This talk will discuss various areas in ergonomics that can benefit from a computer vision solution and the challenges ahead in designing them.

1000 – 1030 EST

Dr. Bugra Tekin

Computer Vision and Mixed Reality for Factories and Frontline Workers. There has been a tremendous increase in the interest and the application areas of computer vision over the past decades. Specifically, for factories and manufacturing scenarios, computer vision has found application areas in visual inspection, warehouse picking, lean manufacturing and task guidance. This talk will give an overview of the computer vision and mixed reality solutions of the Microsoft Mixed Reality team for manufacturing environments. We will further discuss our research on computer vision for factory floors and frontline workers, and, in particular, our studies on data collection, robotics, few-shot transfer learning, egocentric action recognition and hand-object interactions.

1030 – 1100 EST

Dr. Quoc-Huy Tran

Unsupervised/Semi-Supervised Temporal Activity Segmentation for Improving Frontline Productivity. Temporal activity segmentation plays an important role in many computer vision applications where videos of semi-repetitive tasks are available. These applications include frontline worker training, task guidance, and warehouse process optimization amongst others. Despite high accuracy, supervised temporal activity segmentation approaches require per-frame labels which can be prohibitively expensive. In this talk, I will introduce our recent efforts at Retrocausal in unsupervised/semi-supervised temporal activity segmentation. I will first present our self-supervised method for learning video representations by aligning videos in time. The learned representations are useful for various tasks such as temporal video alignment, which can be applied for transferring labels from a labeled video to an unlabeled one. Next, I will introduce our work on unsupervised temporal activity segmentation. Given a collection of unlabeled videos, our method simultaneously discovers the actions and segments the videos. Lastly, I will present our latest work on semi-supervised temporal activity segmentation, which provides a reasonable tradeoff between annotation requirement and segmentation accuracy.

1100 – 1130 EST

Dr. Rahul Bhotika

Industrial Computer Vision at Scale: Practical Considerations and Research Challenges. The industrialization and adoption of machine learning and computer vision at scale presents several new practical considerations and research challenges. One such challenge is in enabling users, particularly non-ML developers/scientists, to bring their own data and solve their specific problem, the so-called "long tail" of computer vision, in a self-serve, automated manner. Another is in operating and improving computer vision services at scale across diverse use-cases and environments. This talk describes our recent research results towards addressing these challenges, including learning task embeddings, improving models over time with feedback, few-shot methods, regression-free model updates, and model compatibility and performance optimization across platforms.

1130 – 1200 EST

Dr. Cordelia Schmid

Structured Video Representations. Understanding videos and predicting actions is key for factories and manufacturing. We start by presenting an approach for spatio-temporal action localization. We describe how action tubelets result in state-of-the-art performance for action detection and show how modeling relations with objects and humans further improves performance. Next, we introduce an approach for behavior prediction for self-driving cars. The approach, VectorNet, is a hierarchical graph neural network that first exploits the spatial locality of individual road components represented by vectors and then models the high-order interactions among all components.